Intro

I joined a new fund earlier this year[1]. One reason is that I have become obsessed with the future of the internet, which I believe is inherently tied to the future of the software engineer (SWE).

This got me thinking about how the internet runs today and how the constraints on its maintenance will affect SWEs, who are the main architects and maintainers of the internet's protocols (essentially, its instruction manual). Today SWEs prioritize writing, reading and testing code alongside documentation and quality control, using tools such as:

The operating system level, where most SWEs prefer Windows, macOS or Ubuntu (a Linux distribution)

The integrated development environment (IDE), where VS Code reigns supreme with over 73% usage in the 2024 Stack Overflow Developer Survey

The asynchronous collaboration space, where Confluence and Jira are the most widely used tools

The database level, where 49% of SWEs use PostgreSQL

The programming language level, where JavaScript has consistently been the most widely used language in the Stack Overflow survey since 2013, though Python overtook it on GitHub in 2024

The cloud infrastructure level, where AWS is still the most used service, though Microsoft Azure and Google Cloud have both gained momentum since 2023, with 24% of AWS users saying they'd like to try Google Cloud in 2025, a sign that market share may be redistributing

(tools a majority of engineers use to code)

This prioritization is changing, though, as new tools consolidate and/or automate coding tasks, leading programmers to prioritize new things such as code review and support for more niche programming languages. This shift is accelerated by the widespread use of similar tech stacks across the industry: when most companies use similar tools, it becomes easier to build automation solutions that work for everyone.

Change is happening quickly; however, this evolution brings new challenges. In 2022-2023, we saw record numbers of security breaches, while continuous updates to AI and low-code/no-code platforms strain server capacity. These pressures are changing the roles of software engineers in real time, as teams expand and contract to meet demand. Factors like internet privacy, automation and supply chain constraints have already changed the SWE's role, encouraging more engineers to 'shift left' (moving testing and security earlier in the development cycle) and to learn, review and debug with AI tools first rather than turning to co-workers, among other changes. How might these factors, in turn, force the internet to evolve as well? Let's start with how the internet came to be…

Context…

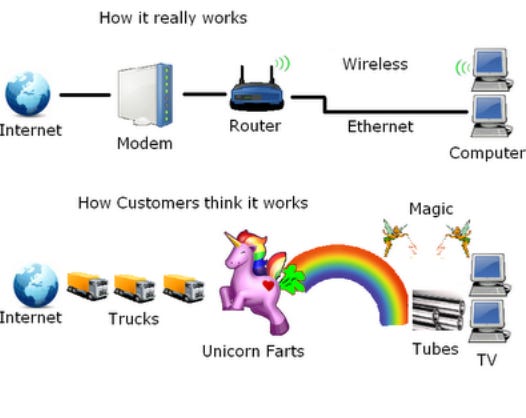

The internet runs on the Internet Protocol (IP). IP addresses are unique identifiers for devices on the internet, which lets messages be sent to and retrieved from a specific device. Built on top of IP (via TCP), HTTP and HTTPS (both protocols, the latter encrypted) define a 'request and respond' structure between a server and the user's web browser of choice. Understanding how the internet works enables developers to identify areas for improvement. As the saying often attributed to Picasso goes: "learn the rules like a pro so you can break them like an artist."
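To make the 'request and respond' structure concrete, here is a minimal sketch using only Python's standard library. It starts a tiny HTTP server on the loopback address 127.0.0.1 (an IP address that always points back at the same machine), sends it one GET request the way a browser would, and returns the address, status code and body of the response. The handler class, the message and the `request_and_respond` function are hypothetical names chosen for illustration, and the exchange is plain HTTP; HTTPS would wrap the same request and response in TLS encryption.

```python
import ipaddress
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Hypothetical server-side handler: answers every GET with a short text body."""

    def do_GET(self):
        body = b"hello from the server"
        self.send_response(200)  # status line of the response
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging to stderr


def request_and_respond():
    """Run one HTTP request/response round trip over the loopback IP address."""
    # Port 0 lets the operating system pick any free port on 127.0.0.1.
    server = HTTPServer(("127.0.0.1", 0), HelloHandler)
    host, port = server.server_address
    threading.Thread(target=server.serve_forever, daemon=True).start()
    # An opener with an empty ProxyHandler talks to the server directly,
    # even if proxy environment variables are set.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        # The client (standing in for a web browser) sends the request...
        with opener.open(f"http://{host}:{port}/") as response:
            # ...and reads back the server's response.
            return ipaddress.ip_address(host), response.status, response.read().decode()
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(request_and_respond())
```

Run directly, the script should print the loopback `IPv4Address('127.0.0.1')`, the status code `200` and the body `'hello from the server'`: one device identified by its IP address, one request, one response.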

Since 2010, the number of internet users worldwide has more than doubled, while global internet traffic has expanded 20-fold. According to the latest estimates, 328 million terabytes of data are created each day. Much of that usage is on multimedia platforms: as of 2022, video streaming accounted for 58% of all internet traffic. Another chink in the internet's armour, in terms of performance, is server demand, driven in large part by the increased use of artificial intelligence (AI) models (from supervised and unsupervised machine learning (ML) to deep learning) for everything from product discovery and search to digital task automation. Partly because server demand keeps increasing as more people come online and/or stay online longer, we now face major security, governance and maintenance challenges. For example, DDoS attacks are at an all-time high, and there is still no universal enforcement body for the internet beyond the recommendations made by the Web Foundation. This has created challenges for consensus, maintenance and accessibility of the internet as a public good, presenting opportunities for nefarious actors to use the internet to the detriment of others.

Thesis

To protect against bad actors and ease the burden on our internet protocol maintainers (historically, mostly SWEs), I believe we will need either to create an enforceable governing body for the internet or to allow for a new structure of online communication: a new paradigm that does not rely on the assumption of good actors as stewards. The internet will either evolve to create and maintain equity in digital spaces, or it will only help users dive further into online silos specific to their communities.

So how will that impact the SWE again…

If the latter happens, SWEs will increasingly have to take on the role of the internet's defenders. As more AI tools come to the forefront promising to make 'anyone a developer', more code is being produced, and maintainers will need to be prepared to filter it and proactively protect internet protocols from a larger pool of potential malicious actors. SWEs will need to strengthen their security knowledge and tools and take a zero trust approach, in which no user, device or piece of code is trusted by default. This will likely make code review and quality assurance a larger part of the day job, potentially fulfilling the "shift left" promise that is currently en vogue.

Looking forward, if software engineers are to remain crucial architects of our online future, we must optimize their workflows to preserve time for problem solving so they can tackle growing challenges. The developer's role may become less manual as developers spend more of their time on research and problem solving, while AI copilots and pair programmers become mainstays of the tech stack for writing and maintaining codebases. The evolution of the software engineering toolkit isn't just inevitable; it may now be necessary to protect the internet as a free and public resource. By empowering developers with these new capabilities, we can better maintain the protocols that keep the internet accessible and safe for all users.

Lastly

To this end, which companies should be backed today? Some ideas:

Marketplaces of trust and reputation: to use a model's output, especially at the enterprise level, you first need to trust it, or at least its inputs. A ledger that graphically shows past and potential relationships/interactions would help, and a marketplace could be built on top of it.

Compute optimization tooling: using known highs and lows in energy demand to simulate data server capacity limits and provide cost-efficient data storage and compute.

Companies that make it easy to protect code even in a staging environment, leaning into the shift left movement to incorporate security concerns and requirements earlier in the development process. One example is lcl.host, which provides HTTPS in a developer's local environment.

If you are brainstorming anything related to these ideas, or have thoughts on mine, let me know!

Flagging that this post represents only my own views, not those of anyone else or any other entity.